PC Virtual Reality, iOS, Android, Quest

October 25, 2021

Unreal Engine / Blueprints / Material Shaders / Niagara / Blender / C++ / Adobe Suite / Figma / Perforce

The client wanted to explore what it would be like for a new officer to attend the scene of a burglary. I was responsible for iterating on and refining a fingerprint-dusting mechanic in which the player searches the scene for possible clues and dusts them for fingerprints. This interaction also needed to be ported to PC and mobile.

I also helped develop a quick-thinking communications center mini-game, in which the player receives information from a caller and has to respond to it correctly.

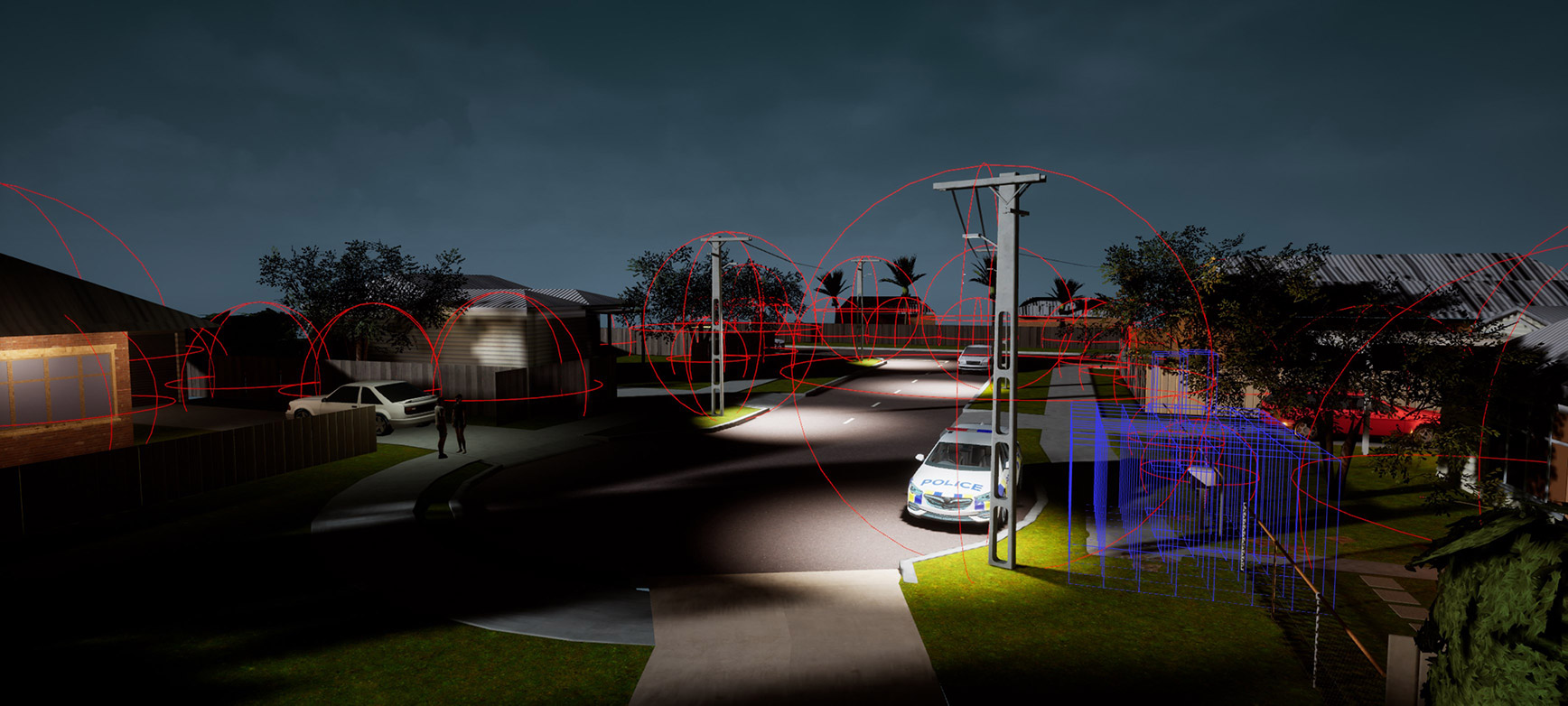

I used Hierarchical Levels of Detail (HLODs) to optimize performance in this project. HLODs manage distant objects efficiently by grouping actors that are near each other and share materials, so each group can be rendered as a single merged proxy. This significantly reduced the draw call count and helped us stay within the Oculus Quest budget of 150 draw calls.
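
To illustrate why grouping helps, here is a minimal C++ sketch that estimates draw calls under an HLOD-style merge. It assumes each unmerged actor costs one draw call and that actors sharing a coarse grid cell and a material collapse into one merged draw. The `Actor` struct, the grid-cell clustering, and `cellSize` are hypothetical simplifications, not a description of how Unreal's HLOD builder actually clusters actors or generates proxy meshes.

```cpp
#include <cmath>
#include <cstddef>
#include <map>
#include <string>
#include <tuple>
#include <vector>

// Draw-call budget for the Quest quoted above.
constexpr std::size_t kQuestDrawCallBudget = 150;

// Hypothetical, simplified actor: one mesh section using one material.
struct Actor {
    float x, y, z;         // world position
    std::string material;  // material name
};

// Before merging: assume each actor costs one draw call.
std::size_t drawCallsUnmerged(const std::vector<Actor>& actors) {
    return actors.size();
}

// HLOD-style estimate: actors in the same coarse grid cell that also share
// a material are merged into one proxy, which costs a single draw call.
std::size_t drawCallsMerged(const std::vector<Actor>& actors, float cellSize) {
    using ClusterKey = std::tuple<long, long, long, std::string>;
    std::map<ClusterKey, std::size_t> clusters;  // cluster key -> actors merged into it
    for (const Actor& a : actors) {
        ClusterKey key{static_cast<long>(std::floor(a.x / cellSize)),
                       static_cast<long>(std::floor(a.y / cellSize)),
                       static_cast<long>(std::floor(a.z / cellSize)),
                       a.material};
        ++clusters[key];
    }
    return clusters.size();  // one draw call per cluster
}

// True if the estimated draw calls fit the Quest budget.
bool withinBudget(std::size_t drawCalls) {
    return drawCalls <= kQuestDrawCallBudget;
}
```

Shrinking `cellSize` produces more, smaller clusters (more draw calls but finer-grained culling), while growing it merges more aggressively; that trade-off is the main tuning knob in any cluster-based merge.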

I also used precomputed visibility volumes to optimize performance. These volumes were placed around each teleport node, and the bake recorded which objects could be within the camera's line of sight from any point inside each volume. This was crucial because the Oculus Quest could not dynamically occlude objects that were inside the camera frustum but hidden behind other geometry. Without precomputed visibility, performance would have suffered from rendering objects the player could not see. With the precomputed volumes in place, we were able to turn off objects that were not currently visible, which increased performance.
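
The runtime side of precomputed visibility is essentially a lookup: find the baked cell containing the camera and draw only the actors recorded as visible from it. The sketch below assumes axis-aligned box cells and integer actor IDs. `VisibilityCell`, `visibleSetFor`, and `shouldRender` are hypothetical names, and the expensive part (baking each cell's visible set offline) is not shown.

```cpp
#include <set>
#include <vector>

struct Vec3 { float x, y, z; };

// Hypothetical precomputed visibility cell: an axis-aligned box around a
// teleport node plus the actor IDs baked as visible from anywhere inside it.
struct VisibilityCell {
    Vec3 min, max;
    std::set<int> visibleActors;

    bool contains(const Vec3& p) const {
        return p.x >= min.x && p.x <= max.x &&
               p.y >= min.y && p.y <= max.y &&
               p.z >= min.z && p.z <= max.z;
    }
};

// Returns the baked visible set for the cell containing the camera,
// or nullptr when the camera is outside every cell.
const std::set<int>* visibleSetFor(const std::vector<VisibilityCell>& cells,
                                   const Vec3& camera) {
    for (const VisibilityCell& cell : cells) {
        if (cell.contains(camera)) return &cell.visibleActors;
    }
    return nullptr;
}

// Per-frame decision for one actor.
bool shouldRender(const std::vector<VisibilityCell>& cells, const Vec3& camera, int actorId) {
    const std::set<int>* visible = visibleSetFor(cells, camera);
    if (visible == nullptr) return true;    // no baked data here: don't cull
    return visible->count(actorId) > 0;     // hidden unless baked as visible
}
```

Falling back to rendering everything when the camera is outside every cell is a deliberate choice in this sketch: it keeps the scheme safe if the player ends up somewhere the bake did not cover.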

Reducing shader complexity was a key focus for optimization in this project. One of the major changes was removing all translucent materials, as rendering translucency on the Quest was very performance-intensive. Instead, I primarily used opaque materials, and used masked materials only when necessary, to keep instruction counts low. Fine-tuning lightmap resolution where necessary also helped the baked lighting look as good as possible.

I implemented a platform-dependent level streaming function that loaded different models, effects, and lighting depending on the platform. For example, the foliage changed per platform, with different trees used to improve visual quality. The Quest had difficulty rendering masked materials against high-contrast backgrounds, so I replaced the main tree with a sculptural alternative and adjusted the lighting. This approach gave a better look while still keeping performance in check.
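
A minimal sketch of the platform switch, assuming the per-platform content lives in separate sublevels. The `Platform` enum and every sublevel name here are hypothetical, and the mobile split in particular is an assumption. In the project this was a level streaming function inside the engine; the sketch only shows the selection logic that would feed it.

```cpp
#include <string>
#include <vector>

// Hypothetical target platforms for this project.
enum class Platform { PCVR, Quest, IOS, Android };

// Returns the sublevels to stream in for a platform (all names hypothetical).
std::vector<std::string> sublevelsFor(Platform platform) {
    // Content shared by every platform.
    std::vector<std::string> levels = {"Scene_Geometry", "Scene_Gameplay"};

    // Foliage, effects and lighting vary per device.
    std::vector<std::string> extra;
    switch (platform) {
        case Platform::PCVR:
            extra = {"Foliage_Full", "FX_Full", "Lighting_PC"};
            break;
        case Platform::Quest:
            // Sculptural tree instead of masked foliage against a bright sky.
            extra = {"Foliage_Sculptural", "FX_Lite", "Lighting_Quest"};
            break;
        case Platform::IOS:
        case Platform::Android:
            extra = {"Foliage_Mobile", "FX_Lite", "Lighting_Mobile"};
            break;
    }
    levels.insert(levels.end(), extra.begin(), extra.end());
    return levels;
}
```

Keeping the shared geometry in common sublevels means only the platform-specific layers differ, so art changes to the core scene apply everywhere at once.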

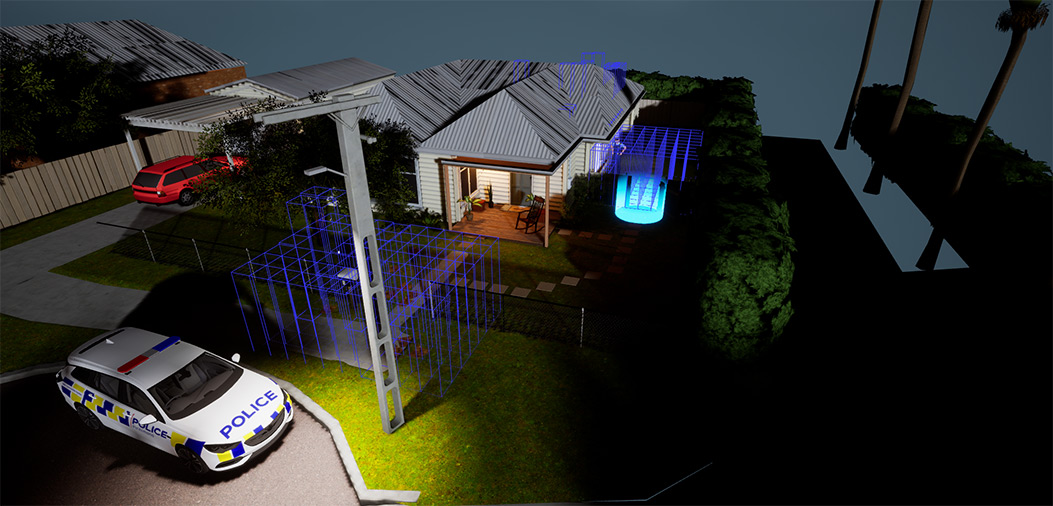

The guide dog, named Blue, originally used a translucent material with a height-based fade to transition in. For performance reasons, we needed to change this to an opaque material. To mask the dog's transition in, I created a simple fade-in effect using a cube-grid particle system built in Niagara: a height-based effect scaled the particles in, and the guide dog was then loaded in underneath them.
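
The height-based scale-in can be expressed as a reveal plane that rises over time, with each cube in the grid easing from zero to full size as the plane passes it. This C++ sketch mirrors the math a per-particle scale module might evaluate; the function names, the linear sweep, and the smoothstep easing over a fixed `band` are assumptions for illustration, not the actual Niagara setup.

```cpp
#include <algorithm>
#include <cassert>

// Clamp to [0, 1], like HLSL saturate().
float saturate(float v) { return std::min(std::max(v, 0.0f), 1.0f); }

// Hermite smoothstep, the same curve as the HLSL intrinsic.
float smoothStep(float edge0, float edge1, float x) {
    assert(edge1 > edge0);
    float t = saturate((x - edge0) / (edge1 - edge0));
    return t * t * (3.0f - 2.0f * t);
}

// Height of the reveal plane at time t: rises linearly from baseZ to topZ
// over `duration` seconds, then holds at topZ.
float sweepHeight(float t, float duration, float baseZ, float topZ) {
    assert(duration > 0.0f);
    return baseZ + saturate(t / duration) * (topZ - baseZ);
}

// Scale of one grid cube at height cubeZ: 0 until the sweep reaches it,
// easing to 1 once the sweep is `band` units above it.
float cubeScale(float cubeZ, float sweepZ, float band) {
    return smoothStep(cubeZ, cubeZ + band, sweepZ);
}
```

Each frame, every cube would evaluate `cubeScale(cubeZ, sweepHeight(t, ...), band)`, so the grid fills in from the bottom up and covers the point where the opaque dog mesh appears.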

Within the dog's material, we applied holographic scan lines to make him feel like a hologram. We also used world position offset to add subtle warping and jitter to the mesh, and used the mesh's normals to dynamically give him a glow underneath.
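
The three material effects reduce to a few lines of per-pixel and per-vertex math. This sketch writes them in C++ rather than as a material graph. The scan-line `density`, `speed`, and `duty` parameters, the stepped sine-based jitter, and the `sharpness` exponent on the glow are hypothetical choices meant to show the shape of each effect, and the code assumes +Z is up.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

// Clamp to [0, 1], like HLSL saturate().
float saturate(float v) { return std::fmin(std::fmax(v, 0.0f), 1.0f); }

// Scan lines: bands along world Z that scroll upward over time.
// density = lines per unit height, speed = scroll rate,
// duty = fraction of each period that is lit.
float scanLineMask(float worldZ, float time, float density, float speed, float duty) {
    float phase = worldZ * density - time * speed;
    float f = phase - std::floor(phase);  // frac()
    return f < duty ? 1.0f : 0.0f;
}

// Underside glow: strongest where the normal points straight down (z = -1),
// zero on upward-facing surfaces.
float undersideGlow(const Vec3& normal, float sharpness) {
    return std::pow(saturate(-normal.z), sharpness);
}

// World position offset jitter: a small sideways shift that changes in
// discrete steps over time and varies with height, for a glitchy wobble.
Vec3 jitterOffset(const Vec3& worldPos, float time, float amplitude, float stepsPerSecond) {
    float step = std::floor(time * stepsPerSecond);
    float n = std::sin(worldPos.z * 0.37f + step * 12.9898f);  // cheap pseudo-random in [-1, 1]
    return {n * amplitude, 0.0f, 0.0f};
}
```

Stepping the jitter with `floor` rather than animating it smoothly is what makes it read as a glitch instead of a wave; a smooth version would simply drop the `floor`.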

I integrated the dog into the desk using a world-position spherical mask. I also added a small particle effect that follows the dog's root bone, making him look as if he is being emitted from the table.
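
A world-position spherical mask is 1 near a center point and falls off to 0 at a radius, which makes it useful for limiting an effect to the area where the dog meets the desk. The sketch below uses a linear falloff with a `hardness` parameter. It is similar in spirit to Unreal's SphereMask material function but is not claimed to match that node's exact formula, and the center and radius values are assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Clamp to [0, 1], like HLSL saturate().
float saturate(float v) { return std::min(std::max(v, 0.0f), 1.0f); }

float distanceBetween(const Vec3& a, const Vec3& b) {
    float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// 1 within radius * hardness of the center, falling linearly to 0 at `radius`.
float sphereMask(const Vec3& worldPos, const Vec3& center, float radius, float hardness) {
    assert(radius > 0.0f && hardness >= 0.0f && hardness < 1.0f);
    float d = distanceBetween(worldPos, center);
    return saturate((radius - d) / (radius * (1.0f - hardness)));
}
```

In a material, this value would typically serve as a lerp alpha, for example blending an emissive glow into the desk around the dog's feet; which property the mask drove in the project is not specified above.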

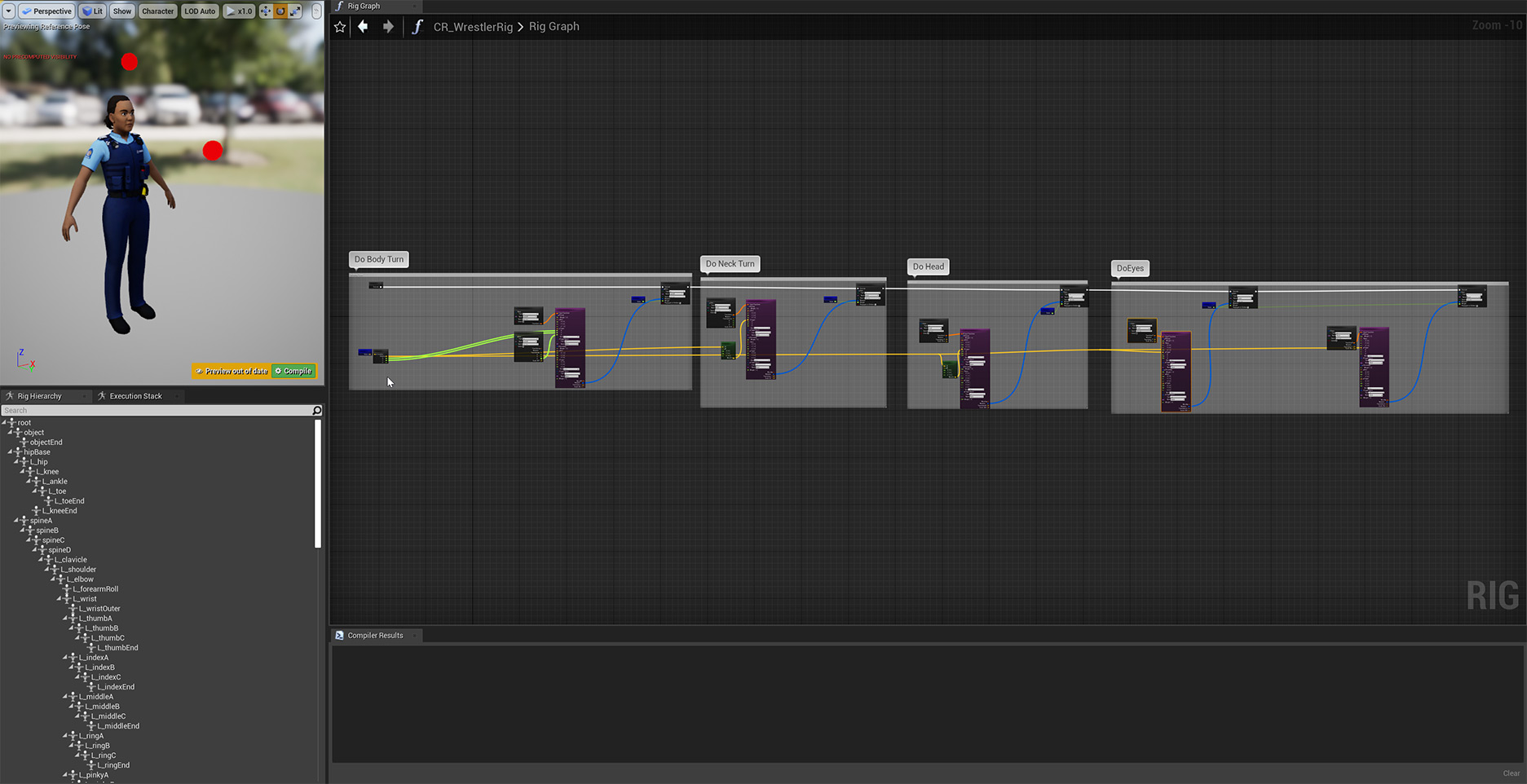

Animation was crucial in this project, as it played a significant role in character-driven dialogue and storytelling. I designed an auto-look system using Control Rig that drove the spine, neck, head, and eye bones. To achieve a more natural look, I gave each bone a different weight, so that rather than only the head rotating, the body also twisted and the eyes moved further than the head. This resulted in more convincing, lifelike animation. The following is the graph of the Control Rig used.
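
The weighting idea can be sketched as splitting the total look rotation across the chain, with each bone taking a share and the eyes taking the largest. This is a hypothetical C++ illustration, not the Control Rig graph itself: the bone names, weights, and per-bone limits are made up, +X is assumed forward and +Z up, and yaw and pitch are treated as additive along the chain, which only approximates how the rotations actually compose.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <cstddef>
#include <string>

struct Vec3 { float x, y, z; };
struct YawPitch { float yaw, pitch; };  // degrees

// Yaw/pitch (degrees) needed to look from `from` to `to` (+X forward, +Z up).
YawPitch lookAngles(const Vec3& from, const Vec3& to) {
    const float kRadToDeg = 57.2957795f;
    float dx = to.x - from.x, dy = to.y - from.y, dz = to.z - from.z;
    float yaw = std::atan2(dy, dx) * kRadToDeg;
    float pitch = std::atan2(dz, std::sqrt(dx * dx + dy * dy)) * kRadToDeg;
    return {yaw, pitch};
}

float clampf(float v, float lo, float hi) { return std::min(std::max(v, lo), hi); }

// Hypothetical share of the look rotation per bone, with a rotation limit.
struct BoneShare { std::string bone; float weight; float limitDeg; };

// Weights sum to 1 so the gaze lands on the target when no limit is hit.
// The eyes take the largest share, so they move further than the head.
const std::array<BoneShare, 4> kLookChain = {{
    {"spine_03", 0.10f, 15.0f},
    {"neck_01", 0.20f, 25.0f},
    {"head", 0.25f, 35.0f},
    {"eyes", 0.45f, 40.0f},
}};

// Local yaw/pitch for each bone in the chain, clamped to that bone's limit.
std::array<YawPitch, 4> distributeLook(const YawPitch& total) {
    std::array<YawPitch, 4> out{};
    for (std::size_t i = 0; i < kLookChain.size(); ++i) {
        const BoneShare& b = kLookChain[i];
        out[i] = {clampf(total.yaw * b.weight, -b.limitDeg, b.limitDeg),
                  clampf(total.pitch * b.weight, -b.limitDeg, b.limitDeg)};
    }
    return out;
}
```

Once a bone clamps, the remaining rotation is simply lost in this sketch; a fuller system might pass the excess on to the next bone in the chain.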

Here is a close-up video of the auto-look system in isolation, with the character in an A-pose. On top of this, I layered the body animations and additive facial animation, which gave the character a lot of life.

I also used Unreal Engine's virtual production tools to capture in-game cinematic footage for a trailer. Working with the Director of Photography (DOP), I designed shots using the engine's dolly and rail components. To enable real-time camera movement, I paired an iPad with a VIVE puck and recorded the desired camera shots using the positional data from the VIVE tracker.
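
Recording a virtual camera amounts to storing timestamped tracker poses and resampling them at playback. The sketch below handles positions only, matching the positional data mentioned above, and uses linear interpolation between samples. `CameraTake` and its methods are hypothetical; the engine's own virtual production tooling handled the actual capture, so this only illustrates the idea.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Vec3 { float x, y, z; };

struct PoseSample { double time; Vec3 position; };

// Hypothetical recorder: stores tracker positions as timestamped samples
// and plays them back with linear interpolation between neighbours.
class CameraTake {
public:
    void record(double time, const Vec3& position) {
        // Samples must arrive in strictly increasing time order.
        assert(samples_.empty() || time > samples_.back().time);
        samples_.push_back({time, position});
    }

    Vec3 sample(double time) const {
        assert(!samples_.empty());
        if (time <= samples_.front().time) return samples_.front().position;
        if (time >= samples_.back().time) return samples_.back().position;
        // First sample strictly after `time`; the one before it is <= `time`.
        auto next = std::upper_bound(samples_.begin(), samples_.end(), time,
            [](double t, const PoseSample& s) { return t < s.time; });
        auto prev = next - 1;
        float a = static_cast<float>((time - prev->time) / (next->time - prev->time));
        const Vec3& p0 = prev->position;
        const Vec3& p1 = next->position;
        return {p0.x + a * (p1.x - p0.x),
                p0.y + a * (p1.y - p0.y),
                p0.z + a * (p1.z - p0.z)};
    }

private:
    std::vector<PoseSample> samples_;
};
```

Clamping to the first and last samples outside the recorded range keeps playback stable if a shot is scrubbed past either end of the take.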