iOS Augmented Reality App

March 8, 2022

Unreal Engine / Blueprints / Material Shaders / Niagara VFX / Adobe Suite / Perforce

ATUA premiered at Sundance Film Festival 2022 and has featured in DocEdge Film Festival 2022.

The AR system was designed for a museum environment, so it had to orient itself without manual setup at the start of each session. To achieve this, I used a hybrid of image tracking and world tracking. The image tracking component recognizes each of the four sides of the pouwhenua, so the application knows which side it is facing and can orient the digital content correctly as soon as the device is pointed at the pouwhenua. Once the digital content is initialized, I switch to world tracking, which lets the user move around the pouwhenua without keeping a specific image in view. This approach provides the flexibility of world tracking while keeping the pouwhenua's location precise across multiple play sessions. In my testing, relying solely on persistent world data to initialize the scene was not precise enough.
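As a rough illustration of the hand-off described above, the sketch below derives one shared anchor from whichever face image is detected, then locks into world tracking. It is not the project's Blueprint logic: the square-column layout, the `HALF_WIDTH_M` value, and the names `Pose2D`, `anchor_from_image`, and `HybridTracker` are all assumptions made for this example, and the math is reduced to the ground plane.

```python
import math
from dataclasses import dataclass

# Assumption: the pouwhenua is modelled as a square column and face `side`
# points outward at side * 90 degrees relative to the column's own heading.
HALF_WIDTH_M = 0.15  # hypothetical half-width of the column, in metres


@dataclass
class Pose2D:
    x: float
    y: float
    yaw_deg: float  # heading in the ground plane


def anchor_from_image(side: int, image_pose: Pose2D) -> Pose2D:
    """Derive the column's centre pose from one detected face image.

    image_pose.yaw_deg is the direction the detected face points (its
    outward normal) in world space.
    """
    yaw = math.radians(image_pose.yaw_deg)
    # Step back from the face along its normal to reach the column centre.
    cx = image_pose.x - HALF_WIDTH_M * math.cos(yaw)
    cy = image_pose.y - HALF_WIDTH_M * math.sin(yaw)
    # Remove the face's own rotation so every side yields the same heading.
    anchor_yaw = (image_pose.yaw_deg - 90.0 * side) % 360.0
    return Pose2D(cx, cy, anchor_yaw)


class HybridTracker:
    """Image tracking until an anchor is found, then world tracking only."""

    def __init__(self) -> None:
        self.mode = "image"
        self.anchor = None

    def on_image_detected(self, side: int, image_pose: Pose2D) -> None:
        if self.mode != "image":
            return  # already initialised; later detections are ignored
        self.anchor = anchor_from_image(side, image_pose)
        self.mode = "world"
```

Because every face resolves to the same centre pose, the content lands in the same place no matter which side the visitor approaches from.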

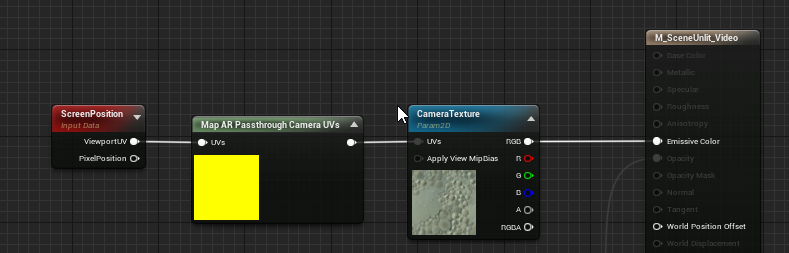

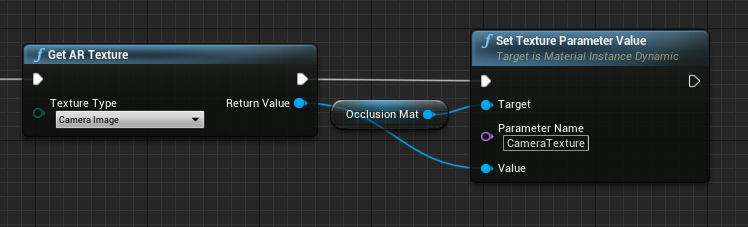

After the scene has been initialized, the four images are used to create occlusion planes that hide the character model behind the pouwhenua as the user walks around it. This is not the standard behavior, as digital content is typically drawn on top of the camera feed. If available, the system uses the custom depth information provided by newer iPhones and iPads with onboard depth sensors. For devices without those sensors, the manual occlusion planes proved to be an effective solution.
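The idea behind both occlusion paths can be sketched in a few lines. In the app the hiding is done by the renderer, so this is only a geometric illustration: a point on the character is hidden when the line of sight from the camera passes through an occluder rectangle, and a sensor depth reading takes precedence when one exists. The function names and the rectangle representation (one corner plus two perpendicular edge vectors) are assumptions for this example.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def occluded_by_plane(camera: Vec3, point: Vec3,
                      quad_origin: Vec3, quad_u: Vec3, quad_v: Vec3) -> bool:
    """True if the segment camera -> point passes through the rectangle.

    quad_origin is one corner; quad_u and quad_v are its perpendicular edges.
    """
    normal = cross(quad_u, quad_v)
    direction = sub(point, camera)
    denom = dot(normal, direction)
    if abs(denom) < 1e-9:
        return False  # line of sight is parallel to the occluder
    t = dot(normal, sub(quad_origin, camera)) / denom
    if not 0.0 < t < 1.0:
        return False  # occluder is behind the camera or beyond the point
    hit = (camera[0] + direction[0] * t,
           camera[1] + direction[1] * t,
           camera[2] + direction[2] * t)
    local = sub(hit, quad_origin)
    u = dot(local, quad_u) / dot(quad_u, quad_u)
    v = dot(local, quad_v) / dot(quad_v, quad_v)
    return 0.0 <= u <= 1.0 and 0.0 <= v <= 1.0


def is_hidden(sensor_depth_m: Optional[float], virtual_depth_m: float,
              plane_result: bool) -> bool:
    """Prefer sensor depth when the device has it, else use the planes."""
    if sensor_depth_m is not None:
        return sensor_depth_m < virtual_depth_m
    return plane_result
```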

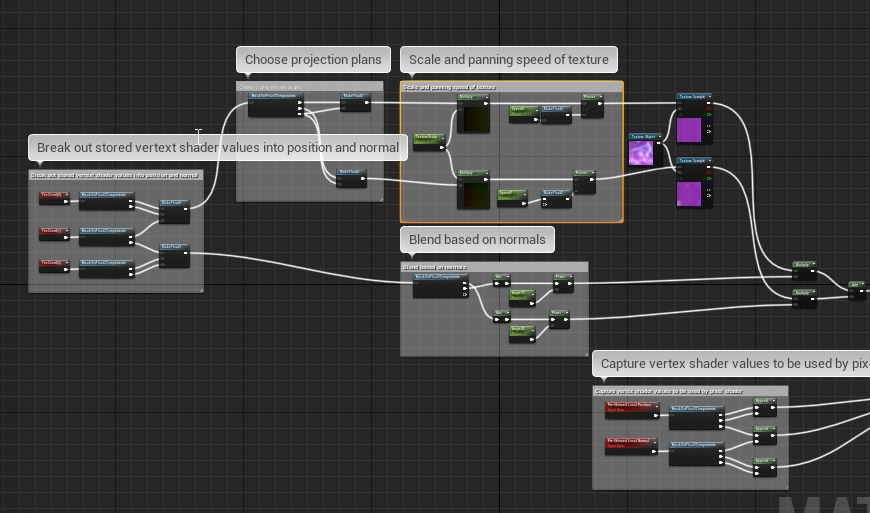

To achieve the desired material effect on the actual object, a triplanar projection method was used. The material was projected from the pre-skinned node, which gave a smooth and accurate texture without unwanted stretching when the character was animated. Subtle changes in the material, such as world position offset or Fresnel color and strength, were animated through Sequencer to keep them synchronized with the audio.
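For readers unfamiliar with triplanar projection, here is a minimal CPU-side sketch of the blend; the real effect lives in an Unreal material graph. The key point from the paragraph is the input: feeding the rest-pose (pre-skinned) position and normal, rather than the animated ones, keeps each surface point sampling the same texel while the character moves. The function names, the `sharpness` parameter, and the `checker` stand-in texture are assumptions for this example.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


def triplanar_weights(normal: Vec3, sharpness: float = 4.0) -> Vec3:
    """Blend weights per axis; a higher sharpness narrows the blend zones."""
    ax, ay, az = (abs(c) ** sharpness for c in normal)
    total = ax + ay + az
    if total == 0.0:
        raise ValueError("normal must be non-zero")
    return (ax / total, ay / total, az / total)


def triplanar_sample(texture: Callable[[float, float], float],
                     rest_position: Vec3, rest_normal: Vec3,
                     scale: float = 1.0, sharpness: float = 4.0) -> float:
    """Project the texture along X, Y and Z and blend by the normal.

    Pass rest-pose values so the result does not change under animation.
    """
    x, y, z = (c * scale for c in rest_position)
    wx, wy, wz = triplanar_weights(rest_normal, sharpness)
    return (wx * texture(y, z)      # projection along X
            + wy * texture(x, z)    # projection along Y
            + wz * texture(x, y))   # projection along Z


def checker(u: float, v: float) -> float:
    """Stand-in procedural texture: a unit checkerboard."""
    return float((math.floor(u) + math.floor(v)) % 2)
```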

The vertex colors contained within the pouwhenua model were used to align the spawning of particles with the symbols on it. 3D ribbons were used to render the particles with their UVs aligned to the mesh, enabling the use of a material effect to pulse and fade along the length of the ribbons. This added depth and dimension to the particle system.
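The two ideas in this paragraph can be approximated as follows. This is a hedged sketch, not the Niagara setup itself: it assumes the symbols are painted into one vertex color channel, and it models the ribbon material as a pulse travelling along the ribbon's U coordinate, multiplied by a fade toward the tail. `spawn_points`, `ribbon_opacity`, and their parameters are hypothetical names for this example.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Color = Tuple[float, float, float]


def spawn_points(vertices: Sequence[Vec3], colors: Sequence[Color],
                 channel: int = 0, threshold: float = 0.5) -> List[Vec3]:
    """Keep only vertices whose painted mask channel marks a symbol."""
    return [v for v, c in zip(vertices, colors) if c[channel] > threshold]


def ribbon_opacity(u: float, time_s: float,
                   speed: float = 1.0, width: float = 0.2) -> float:
    """Opacity at position u in [0, 1] along the ribbon at a given time.

    A triangular pulse of the given half-width loops along the ribbon,
    and the whole ribbon fades linearly from head (u=0) to tail (u=1).
    """
    head = (time_s * speed) % 1.0
    d = abs(u - head)
    d = min(d, 1.0 - d)  # wrap so the pulse loops smoothly
    pulse = max(0.0, 1.0 - d / width)
    fade = 1.0 - u
    return pulse * fade
```

Aligning the ribbon UVs to its length is what makes a one-dimensional function like `ribbon_opacity` sufficient to drive the effect.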