Oculus Quest

Unreal Engine/ Control Rig/ C++/ Blueprints/ Material Shaders/ Niagara/ Houdini/ Blender/ Adobe Suite/ Perforce

Due to time constraints, it was decided that our animator would create hero animations only where needed and use blendable poses to match the guide character's dialogue in the remaining scenes. Without a dynamic way to blend between those static poses, however, the guide would look stiff and lifeless. To make the guide feel more alive, I designed a Control Rig system that enhances the pose blends, using bone targets and spring constraints to interpolate smoothly between poses. As the comparison below shows, the Control Rig blend (left) looks and feels much better than a simple linear blend (right).

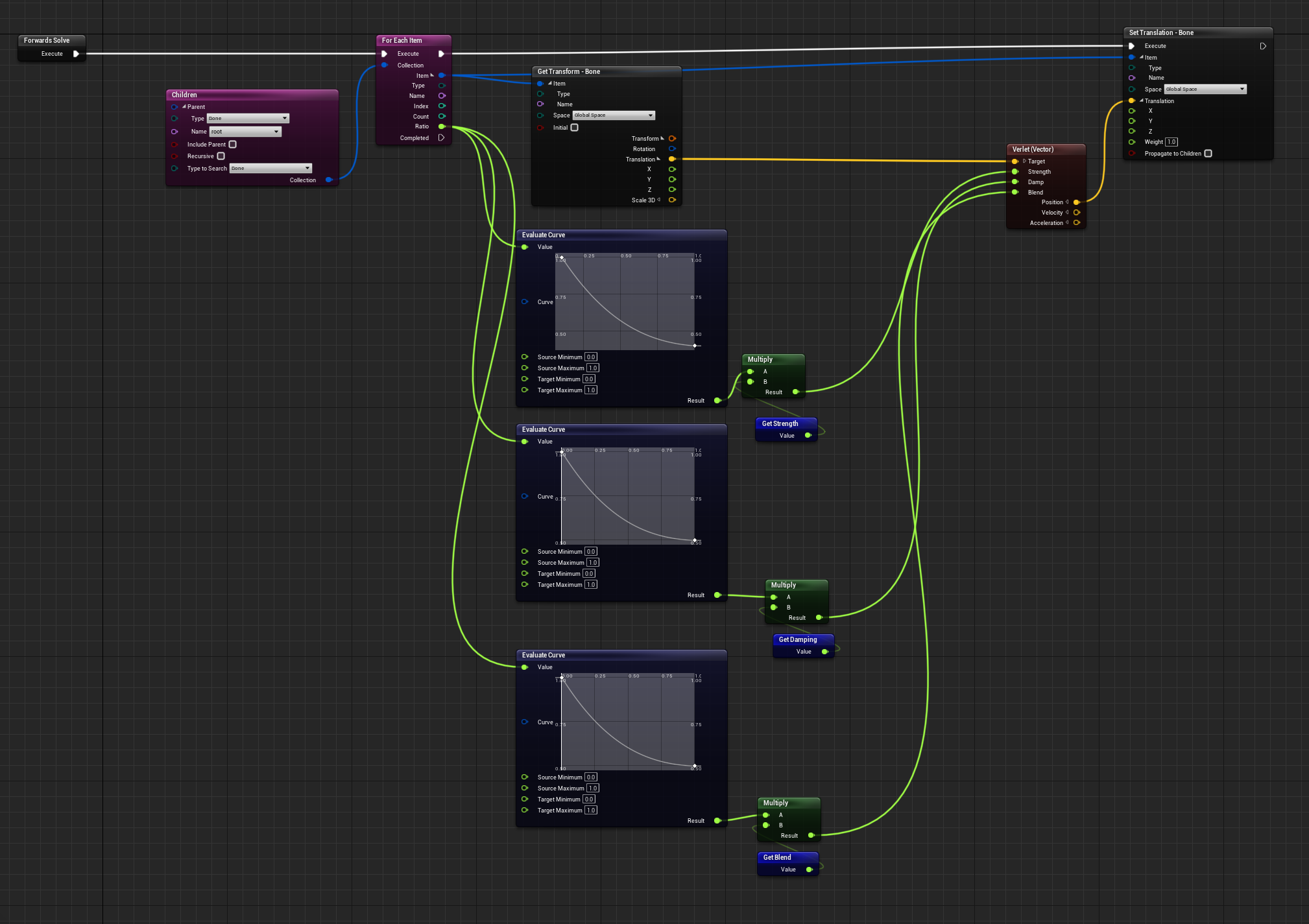

Here you can see the Control Rig layer that drives the spring constraint. It receives a few variables that can be tweaked per animation to produce different stiffness results.

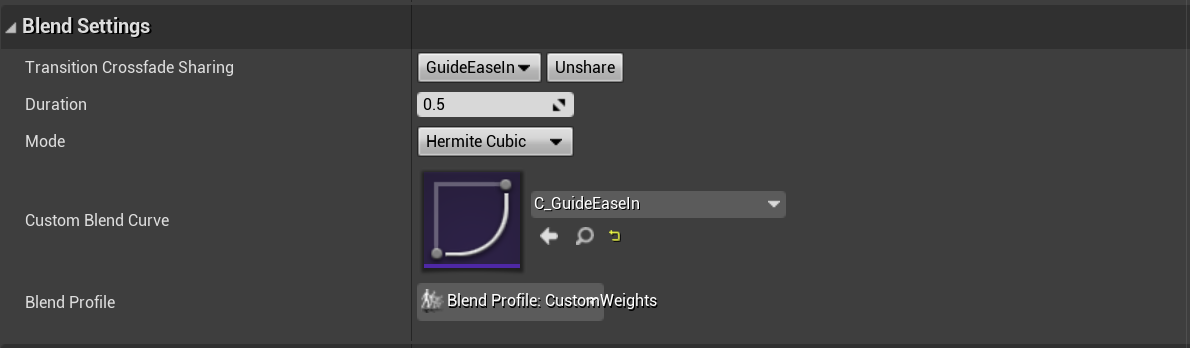
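The rig itself is built in Unreal's Control Rig graph, as the screenshot above shows. The C++ below is a rough, engine-independent illustration of what a spring constraint does each frame: it drives one value toward a target pose value with a damped spring. `SpringState`, `StepSpring`, and the `stiffness` and `damping` parameters are hypothetical names chosen for this example. They are not the Control Rig API. The semi-implicit Euler integration is also an assumption, not a description of how Unreal's spring node is implemented.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical per-bone spring state. In a rig this would be one channel
// (for example a rotation angle or one position axis) of a single bone.
struct SpringState {
    float value = 0.0f;     // current, spring-driven value
    float velocity = 0.0f;  // rate of change carried between frames
};

// Advances a damped spring one step toward `target`.
// stiffness: how strongly the value is pulled toward the target pose.
// damping:   how quickly velocity bleeds off (higher = less overshoot).
// Semi-implicit (symplectic) Euler: update velocity first, then value.
void StepSpring(SpringState& s, float target, float stiffness, float damping, float dt) {
    assert(dt > 0.0f);
    const float accel = stiffness * (target - s.value) - damping * s.velocity;
    s.velocity += accel * dt;
    s.value += s.velocity * dt;
}
```

Raising `stiffness` makes the bone reach the new pose faster. Lowering `damping` lets it overshoot and settle, which is the kind of per-animation tweak described above.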

In addition to the Control Rig, I implemented a custom blend profile so the bones transition between poses non-linearly. Because the rig is a single linear chain of bones, this produced a visually appealing "peeling" effect as the bones moved into the next pose.

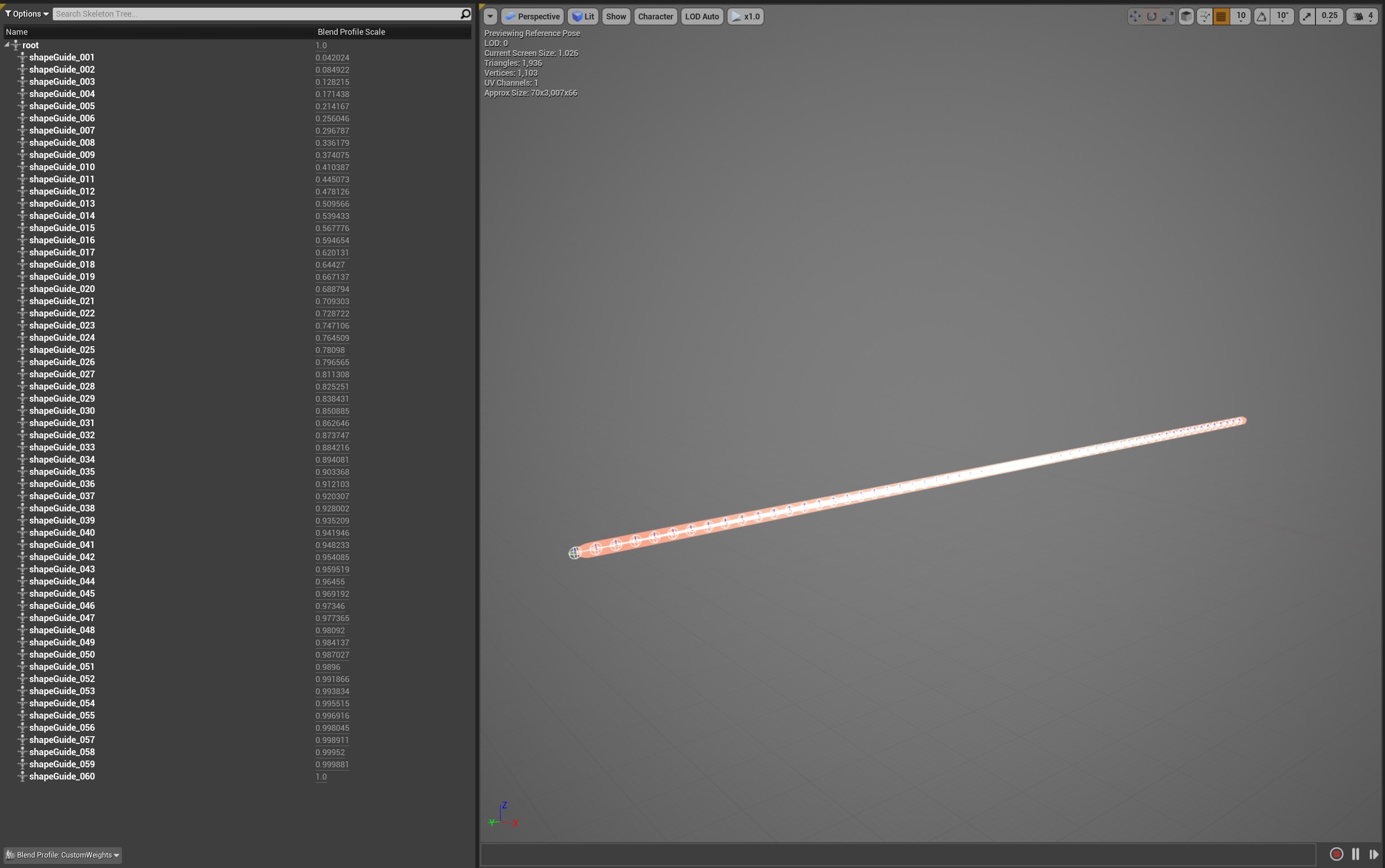

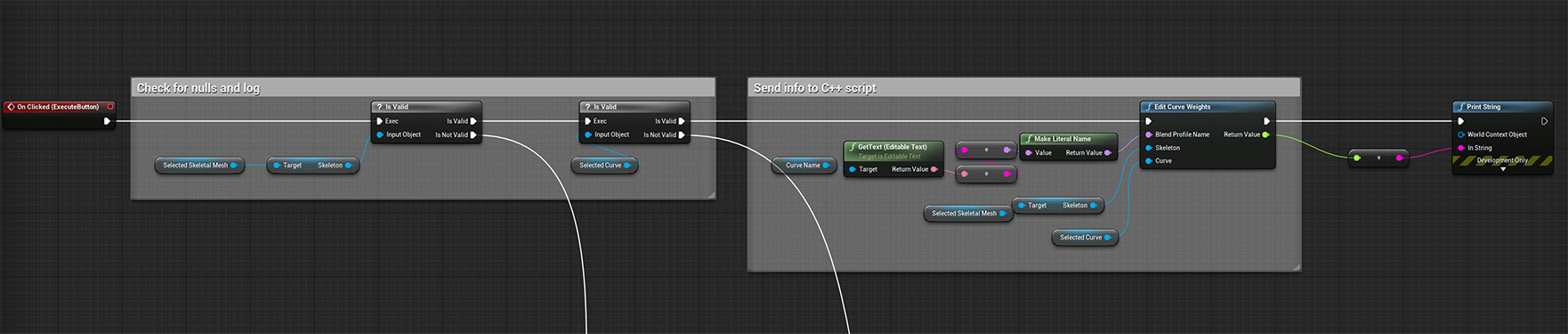

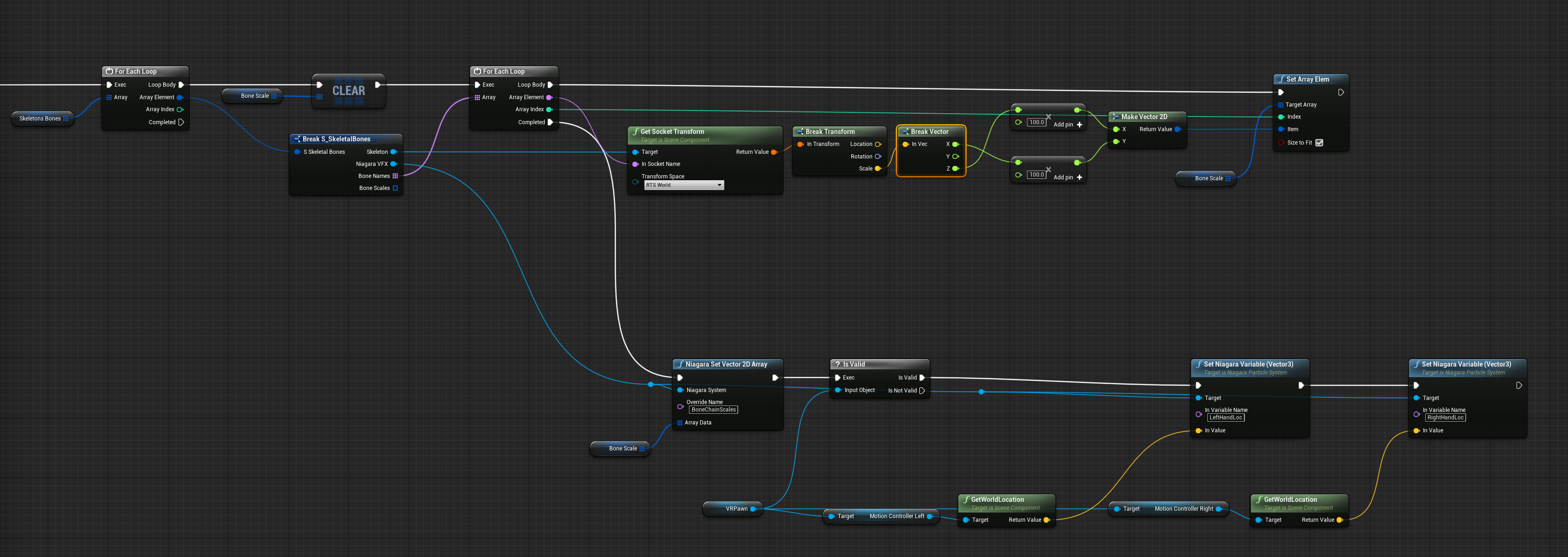
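In Unreal, a blend profile assigns each bone a scale that adjusts how it blends relative to the rest of the skeleton. The sketch below is a hypothetical, engine-independent model of the peeling effect. It does not reproduce Unreal's blend profile implementation.

- `MakeChainBlendScales` gives the root full blend speed and slows each bone further down the chain.
- `BoneBlendAlpha` applies that scale plus a smoothstep ease, so bones near the root finish their transition before the tip does.

Both function names and the linear falloff are assumptions for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical per-bone blend scales for a linear chain (index 0 = root).
// The root blends at full speed; each bone further down blends more slowly,
// falling off linearly to `tipScale` at the tip.
std::vector<float> MakeChainBlendScales(int numBones, float tipScale) {
    assert(numBones > 1 && tipScale > 0.0f && tipScale <= 1.0f);
    std::vector<float> scales(numBones);
    for (int i = 0; i < numBones; ++i) {
        const float t = static_cast<float>(i) / (numBones - 1); // 0 at root, 1 at tip
        scales[i] = 1.0f + (tipScale - 1.0f) * t;
    }
    return scales;
}

// Blend alpha for one bone, `elapsed` seconds into a transition of `blendTime`
// seconds. A scale of 1 finishes on time; smaller scales finish later.
float BoneBlendAlpha(float elapsed, float blendTime, float scale) {
    const float linear = std::clamp(elapsed * scale / blendTime, 0.0f, 1.0f);
    return linear * linear * (3.0f - 2.0f * linear); // smoothstep ease (non-linear)
}
```

With a five-bone chain and `tipScale = 0.5f`, the tip is only halfway blended at the moment the root finishes, and it completes its transition at twice the base blend time.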

The illustration shows the skeleton with its bone weights visible. Assigning these weights meant manually iterating over each bone, which was slow and inefficient. To streamline the task, I developed a custom editor utility widget using both C++ and Blueprints.

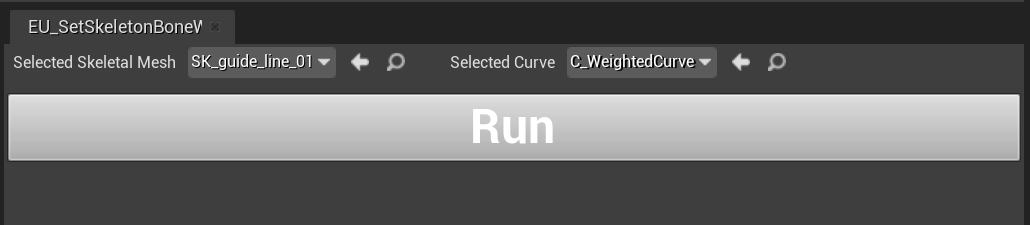

The illustration shows the custom editor widget, which provides a straightforward interface for selecting the skeleton to edit and the curve used to assign the weights. Because the guide skeleton is a long, linear chain of bones, it maps naturally onto a curve. Each bone is placed along the curve's 0 to 1 range in chain order and takes its weight from the curve at that point. This made iterating on the custom blend fast and let us quickly tune its feel to the animator's specifications.

This C++ corresponds to the "Edit Curve Weights" Blueprint node shown above. Mixing Blueprints and C++ is extremely powerful, and Unreal's Editor Utility Widgets make creating custom editor UI much easier.

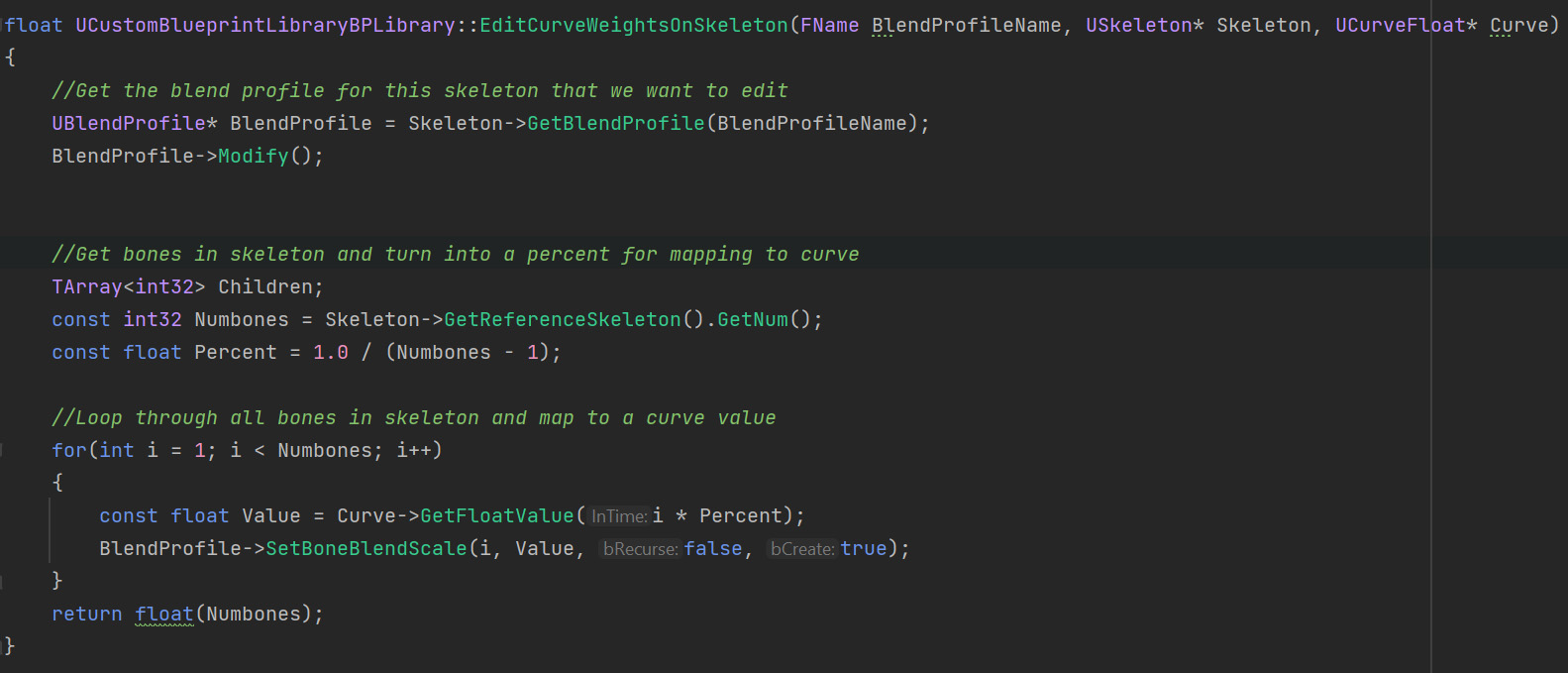

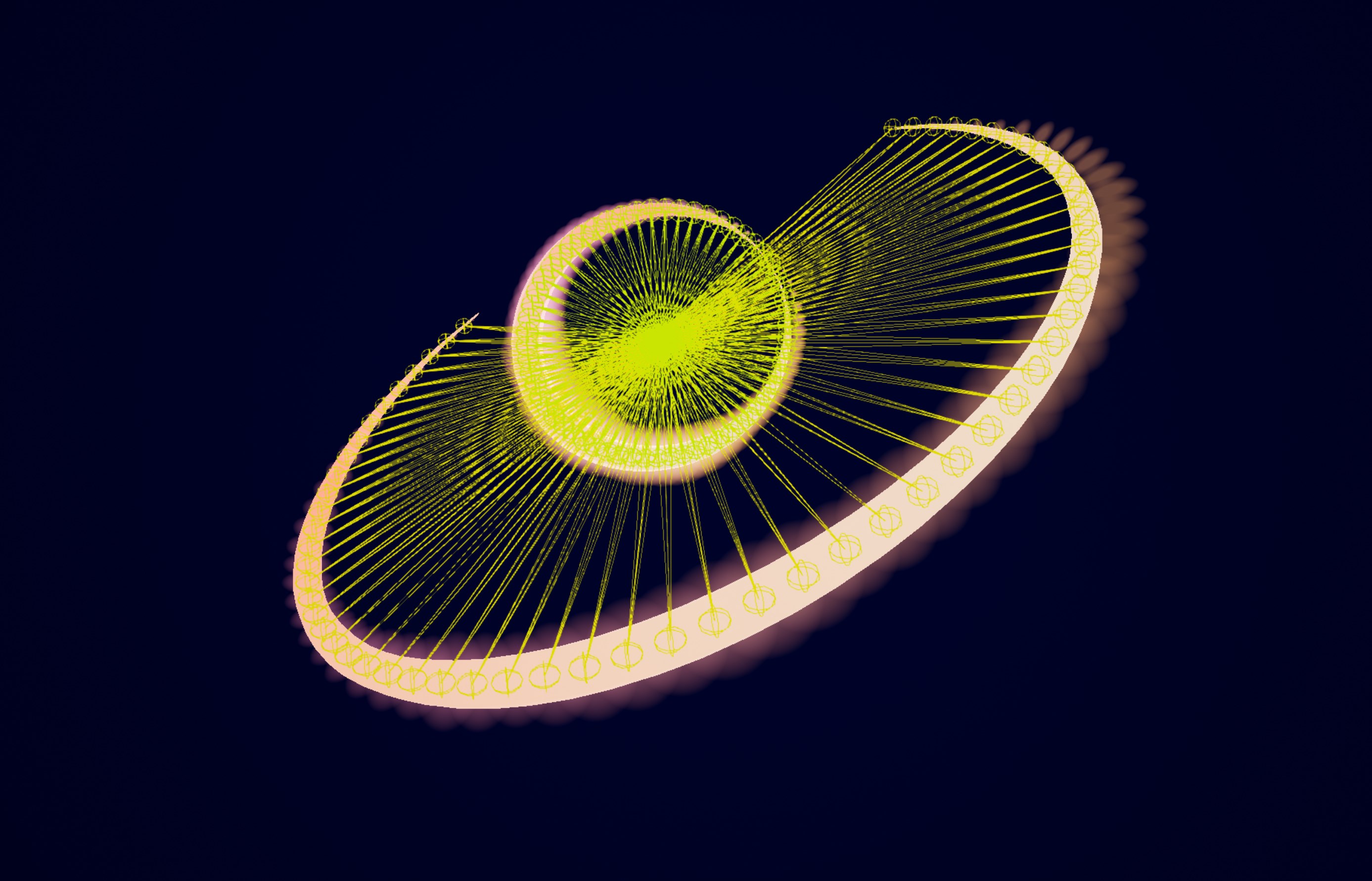
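The tool's actual C++ appears in the screenshots above. The sketch below is a hypothetical, engine-independent version of the core idea. Each bone in the chain gets a position between 0 and 1 from its index, and its weight is read from the curve at that position. `CurveKey`, `EvaluateCurve`, and `CurveToBoneWeights` are illustrative names. The curve is simplified to piecewise-linear keys, whereas Unreal's editor curves also support cubic interpolation.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical curve key: (time in 0..1, value).
using CurveKey = std::pair<float, float>;

// Evaluates a piecewise-linear curve. Keys must be sorted by time.
float EvaluateCurve(const std::vector<CurveKey>& keys, float t) {
    assert(!keys.empty());
    if (t <= keys.front().first) return keys.front().second;
    if (t >= keys.back().first) return keys.back().second;
    for (std::size_t k = 1; k < keys.size(); ++k) {
        if (t <= keys[k].first) {
            const auto& [t0, v0] = keys[k - 1];
            const auto& [t1, v1] = keys[k];
            const float a = (t - t0) / (t1 - t0); // position between the two keys
            return v0 + (v1 - v0) * a;
        }
    }
    return keys.back().second; // not reached when keys are sorted
}

// Maps each bone of a linear chain (index 0 = root) onto the curve's 0..1
// range and returns one weight per bone.
std::vector<float> CurveToBoneWeights(const std::vector<CurveKey>& keys, int numBones) {
    assert(numBones > 1);
    std::vector<float> weights(numBones);
    for (int i = 0; i < numBones; ++i) {
        const float t = static_cast<float>(i) / (numBones - 1);
        weights[i] = EvaluateCurve(keys, t);
    }
    return weights;
}
```

Reshaping the curve, rather than editing bones one at a time, is what keeps the per-bone weights consistent along the chain.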

I determined that the guide character's mesh lacked the dimension and softness the creative lead wanted. Due to technical limitations on the Oculus Quest platform, standard post-processing effects were not a feasible way to add a glow. To overcome this, I created a particle system that spawns particles from the bones and uses an additive shader to create the illusion of a glow.

Here you can see the code. It also feeds in the player's hand positions to create a small interaction between the particle system and the player's hands.
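The write-up does not describe the exact form of the hand interaction. The C++ below is therefore a hypothetical illustration of one plausible version: particles within a radius of a hand are pushed directly away from it, with the push fading out toward the edge of that radius. `Vec3`, `HandRepulsionOffset`, `radius`, and `strength` are assumptions for this sketch. In the project itself, this kind of logic would more likely live in a Niagara module than in standalone C++.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Hypothetical hand interaction: returns an offset that pushes a particle
// directly away from the hand. The push is `strength` at the hand and fades
// linearly to zero at `radius`. Particles outside the radius are unaffected.
Vec3 HandRepulsionOffset(const Vec3& particle, const Vec3& hand, float radius, float strength) {
    assert(radius > 0.0f);
    const Vec3 d{particle.x - hand.x, particle.y - hand.y, particle.z - hand.z};
    const float dist = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
    if (dist >= radius || dist <= 1e-6f) return {0.0f, 0.0f, 0.0f};
    const float falloff = 1.0f - dist / radius;   // 1 at the hand, 0 at the radius
    const float s = strength * falloff / dist;    // normalise d, then apply strength
    return {d.x * s, d.y * s, d.z * s};
}
```

The returned offset would be added to each particle's bone-driven position every frame, once per tracked hand.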

Because the guide character doesn't have a traditional mouth, it was important to the creative lead that it still felt like the guide was the one speaking. This meant finding a way for the guide to react procedurally to the spoken audio. I did this by leveraging Unreal's Niagara particle system and its audio analysis features. Here you can see the guide's particle system reacting to the music at runtime.

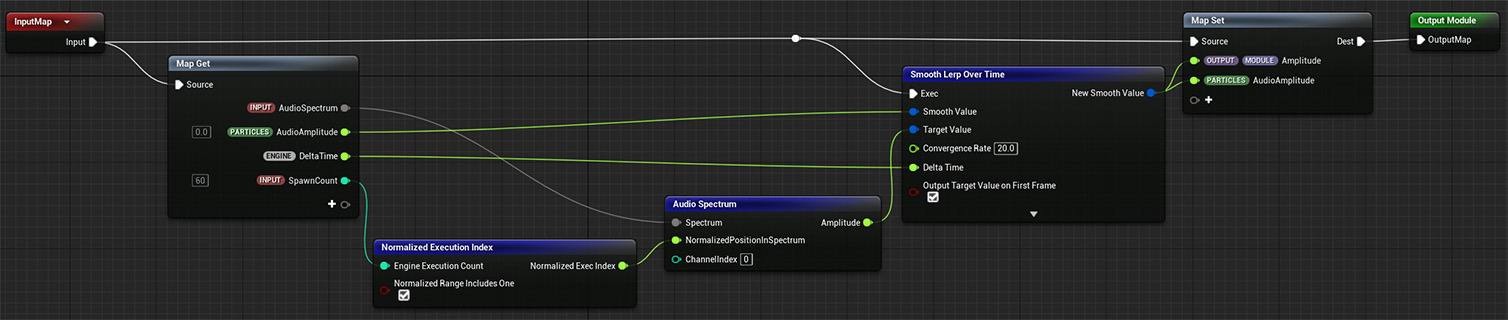
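To make "audio amplitude" concrete, the sketch below shows one common way to measure it: the root-mean-square (RMS) of a block of samples. This is a swapped-in illustration and not necessarily what Niagara computes internally. `RmsAmplitude` is a hypothetical name, and the samples are assumed to be normalised floats in [-1, 1].

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Root-mean-square amplitude of one block of audio samples.
// Assumes samples are normalised to [-1, 1]; returns 0 for an empty block.
float RmsAmplitude(const std::vector<float>& samples) {
    if (samples.empty()) return 0.0f;
    double sumSquares = 0.0;
    for (float s : samples) {
        assert(std::fabs(s) <= 1.0f); // expects normalised samples
        sumSquares += static_cast<double>(s) * s;
    }
    return static_cast<float>(std::sqrt(sumSquares / samples.size()));
}
```

RMS tracks perceived loudness more steadily than a single peak sample, but it still jumps from block to block, which is why it is worth smoothing before it drives particle scale.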

Using Niagara modules, I created custom functionality that reads the current audio amplitude and smoothly lerps it over time.

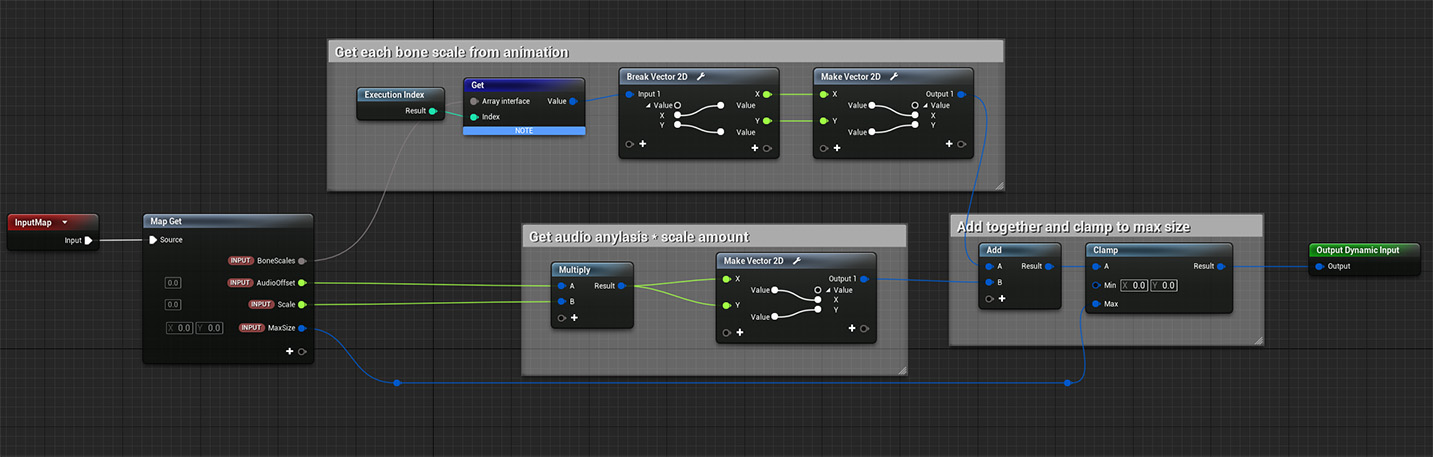
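The smoothing step can be sketched as a frame-rate-independent lerp. Each frame, the smoothed value moves toward the latest amplitude by a factor derived from the frame time. This exponential form is an assumption chosen for the sketch; the Niagara module shown above may use a fixed lerp factor instead. `SmoothAmplitude` and `smoothingSpeed` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// Moves `current` toward `target` by a fraction derived from the frame time,
// so the smoothing behaves the same at different frame rates.
// smoothingSpeed: higher values follow the raw amplitude more closely.
float SmoothAmplitude(float current, float target, float smoothingSpeed, float dt) {
    assert(smoothingSpeed >= 0.0f && dt >= 0.0f);
    const float alpha = 1.0f - std::exp(-smoothingSpeed * dt); // lerp factor in [0, 1)
    return current + (target - current) * alpha;               // lerp(current, target, alpha)
}
```

Because the factor is `1 - exp(-smoothingSpeed * dt)`, two half-length frames give the same result as one full-length frame when the target is held constant. As a result, the guide's reaction does not change with frame rate.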

I then take the current bone scale and add the audio amplitude to get the final particle scale.
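As a minimal sketch of that sum, assuming one particle per bone, the C++ below adds the smoothed amplitude to each bone's scale. `FinalParticleScales` and the `amplitudeGain` multiplier are hypothetical. With a gain of 1, the result is exactly the bone scale plus the amplitude.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper: one particle per bone. Each particle's scale is its
// bone's current scale plus the smoothed audio amplitude. `amplitudeGain` is
// an illustrative multiplier; leaving it at 1 gives "bone scale + amplitude".
std::vector<float> FinalParticleScales(const std::vector<float>& boneScales,
                                       float smoothedAmplitude,
                                       float amplitudeGain = 1.0f) {
    assert(smoothedAmplitude >= 0.0f);
    std::vector<float> particleScales;
    particleScales.reserve(boneScales.size());
    for (float boneScale : boneScales) {
        particleScales.push_back(boneScale + smoothedAmplitude * amplitudeGain);
    }
    return particleScales;
}
```

Adding the amplitude instead of multiplying by it keeps every particle visible during silence, since the bone scale alone still sets the resting size.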

With all of these effects combined, I was able to tick all the boxes for our creative lead's vision while still running on the target platform.